
Provisioning to a JDBC Source via an external jar


Disclaimer: Some information in this article may be outdated. Please verify details against the latest resources or reach out to our technical teams.

Introduction

To better enable both partners and customers, we have outlined a process that lets technically savvy customers modify their JDBC provisioning rule without directly engaging IdentityNow's Expert Services team. We do not recommend this approach unless you have technical resources on hand with at least an intermediate-level knowledge of Java.

Prerequisites

- A functional JDBC source that is aggregating accounts into IdentityNow successfully.

- A JDBC driver jar attached to the source config (see Required JDBC Driver JAR Files).

- Eclipse or another IDE able to import Maven projects.

- The 'identityiq.jar' to import into your IDE (attached at the bottom).

- Intermediate knowledge of Java.

- Understanding of Account and Attribute requests within an IdentityNow provisioning plan.

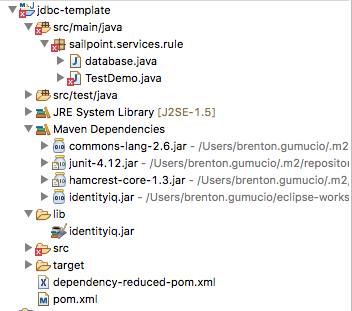

Elements of the project

- JDBC Rule Adapter: This rule still needs to be uploaded to your org by Expert Services or Professional Services. Essentially, it calls a 'provision' method in the attached jar, where all of the logic is built. The method receives the application, the connection to the database, the plan, and an *optional* log file. An example is attached below.

- Primary Java class: This is the main class in our example and handles the routing and logic of the code. It is the home of the 'provision' method: it receives the account and attribute requests and directs each request to the appropriate call in the project's auxiliary class. (In the attached project, this is TestDemo.java.)

- Auxiliary Java class: This class executes stored procedures or (in this project's case) prepared statements against the target source, and contains the methods that are called from the Primary Java class. In this project, it is called database.java.
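The Auxiliary Java class can call stored procedures instead of running prepared statements. A minimal sketch of that variant, using JDBC's `CallableStatement`, is below. It assumes a hypothetical procedure named `CREATE_ACCOUNT` that takes three IN parameters (user ID, first name, last name) and returns an integer status through a fourth OUT parameter; the class name `StoredProcedureDatabase` is also illustrative. Match the call string and parameter order to the procedure actually defined on your database.

```java
import java.sql.CallableStatement;
import java.sql.Connection;
import java.sql.SQLException;
import java.sql.Types;

/**
 * Hypothetical stored-procedure variant of the Auxiliary Java class.
 * CREATE_ACCOUNT, its parameter order, and its OUT status code are assumptions.
 */
public class StoredProcedureDatabase {

    // JDBC escape syntax: three IN parameters followed by one OUT parameter.
    public static final String CREATE_ACCOUNT_CALL = "{call CREATE_ACCOUNT(?, ?, ?, ?)}";

    private StoredProcedureDatabase() {}

    /** Calls the procedure and returns the status code it reports through its OUT parameter. */
    public static int createAccount(Connection conn, String userId, String firstName, String lastName)
            throws SQLException {
        // try-with-resources closes the statement only; the connection is passed in
        // by the caller, which is assumed here to manage its lifecycle.
        try (CallableStatement cs = conn.prepareCall(CREATE_ACCOUNT_CALL)) {
            cs.setString(1, userId);
            cs.setString(2, firstName);
            cs.setString(3, lastName);
            cs.registerOutParameter(4, Types.INTEGER); // OUT parameters must be registered before execute()
            cs.execute();
            return cs.getInt(4);
        }
    }
}
```

The same pattern extends to modify, enable, and disable procedures: one call-string constant per procedure and one method per operation.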

Building a project structure

Within Eclipse, or any other IDE, your file structure should be as follows:

Make sure that you have imported the 'identityiq.jar' and you can see it in your Maven dependencies.

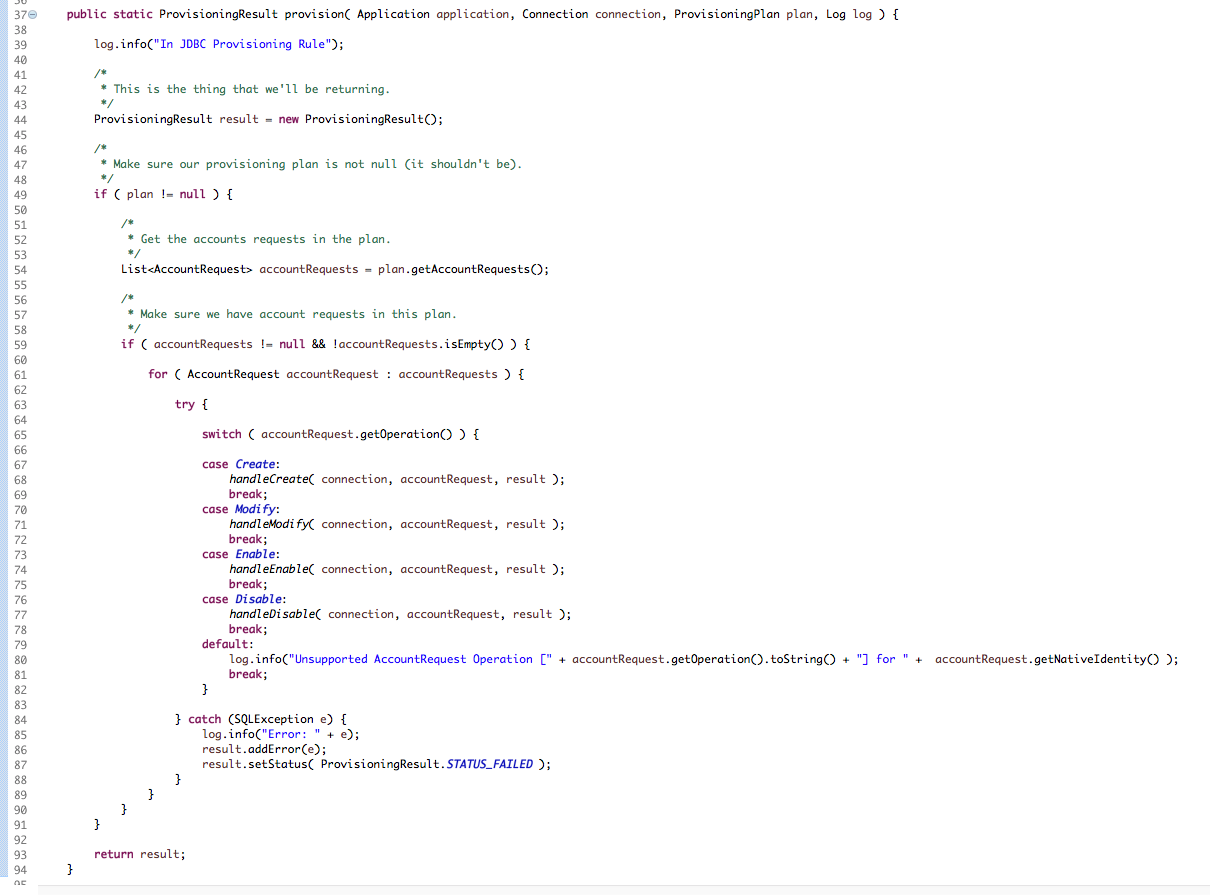

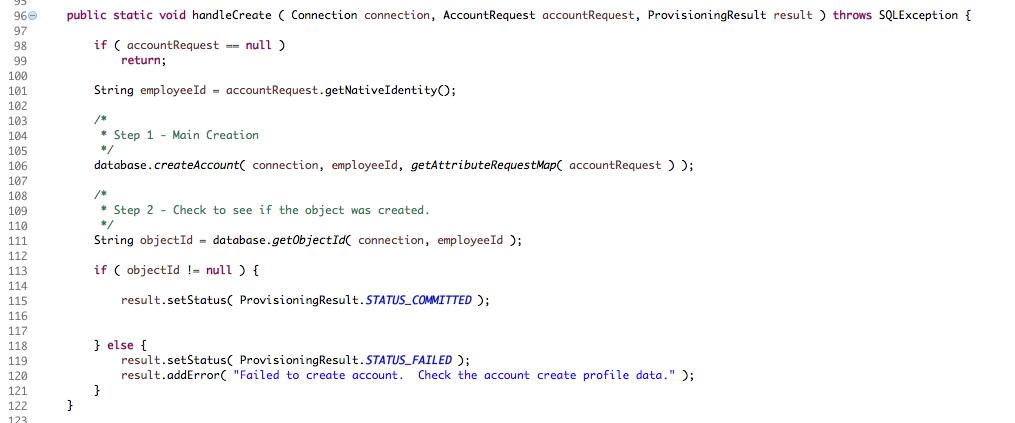

Elements of the Primary Java class

This class is the foundation of the project. Every request arrives here first and is then passed on to other methods.

- Provision method: This breaks down the request and calls the appropriate method for the operation.

- Operation methods: One exists for every operation (Create, Modify, Enable, Disable).

- Now that a request has come in and we have determined its type, we need to call the Auxiliary Java class in our project.

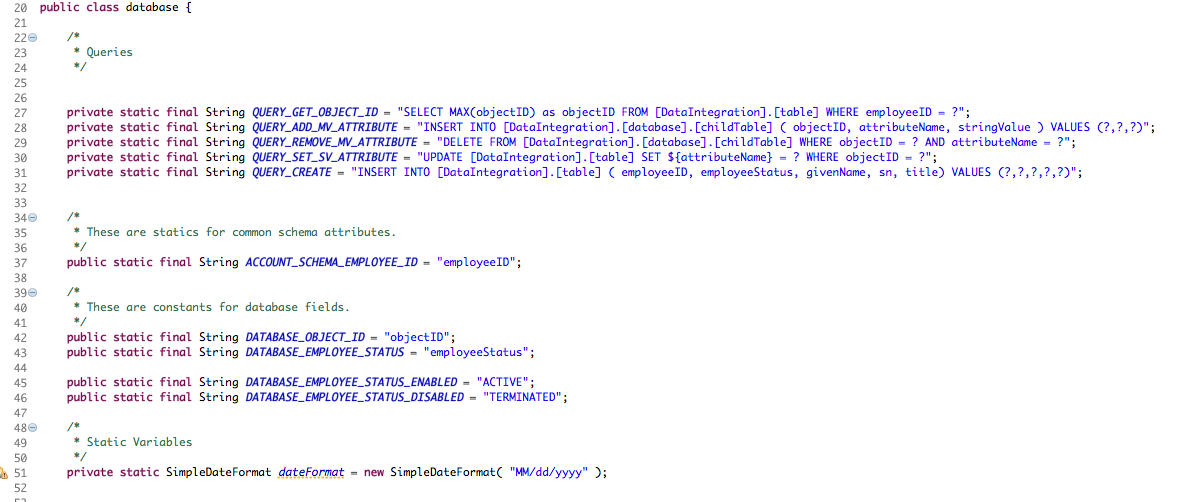
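The routing described above can be sketched as follows. This is a simplified, hypothetical stand-in for TestDemo.java: the real class receives a provisioning plan and its account requests through the types in `identityiq.jar`, whereas here `AccountRequest` and `Operation` are minimal illustrative types so the sketch compiles on its own. The operation methods only record what they would have done; in the real project each one would call the Auxiliary Java class. The code sticks to Java 8 syntax.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;

/** Hypothetical, self-contained sketch of the Primary Java class's routing logic. */
public class ProvisionDispatcher {

    /** Illustrative operation names mirroring Create, Modify, Enable, Disable. */
    public enum Operation { CREATE, MODIFY, ENABLE, DISABLE }

    /** Illustrative stand-in for an account request: target account plus attribute values. */
    public static final class AccountRequest {
        final Operation op;
        final String nativeIdentity;
        final Map<String, String> attributes;

        public AccountRequest(Operation op, String nativeIdentity, Map<String, String> attributes) {
            this.op = op;
            this.nativeIdentity = nativeIdentity;
            this.attributes = attributes == null ? Collections.<String, String>emptyMap() : attributes;
        }
    }

    /** Records which operation method handled each request (demonstration only). */
    private final List<String> handled = new ArrayList<>();

    /** Entry point: determine each request's operation and route it to one method. */
    public List<String> provision(List<AccountRequest> requests) {
        for (AccountRequest req : requests) {
            switch (req.op) {
                case CREATE:  create(req);  break;
                case MODIFY:  modify(req);  break;
                case ENABLE:  enable(req);  break;
                case DISABLE: disable(req); break;
                default: throw new IllegalArgumentException("Unsupported operation: " + req.op);
            }
        }
        return handled;
    }

    // In the real project, each method would pass nativeIdentity and attributes
    // to the corresponding method in the Auxiliary Java class.
    private void create(AccountRequest r)  { handled.add("create:" + r.nativeIdentity); }
    private void modify(AccountRequest r)  { handled.add("modify:" + r.nativeIdentity); }
    private void enable(AccountRequest r)  { handled.add("enable:" + r.nativeIdentity); }
    private void disable(AccountRequest r) { handled.add("disable:" + r.nativeIdentity); }
}
```

Keeping one small method per operation makes it easy to add logging or validation for a single operation without touching the routing switch.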

Elements of the Auxiliary Java class

- Static final strings: We define both public and private static final strings to streamline interaction with the database. These may hold the query strings and/or other constants used in the project.

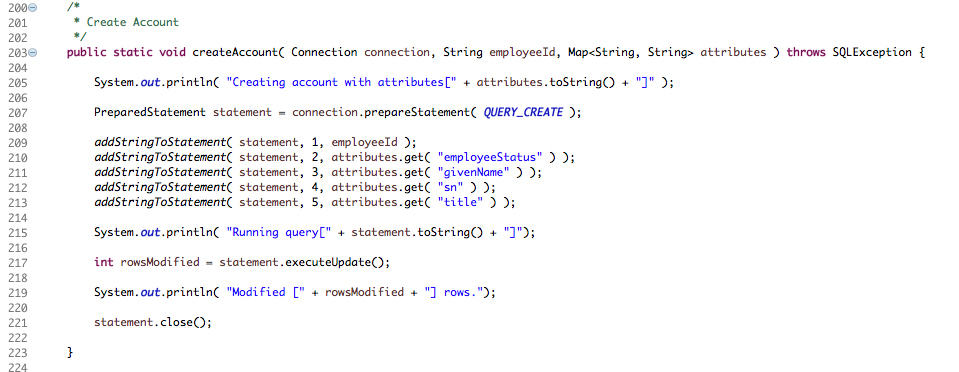

- Working methods: These methods do the actual work. By this point we have received the request and determined where it should go, so it only needs to be executed. Notice that each method simply runs a prepared statement built from one of the final strings.
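A minimal sketch of such an auxiliary class is below, pairing static final query strings with working methods that run prepared statements. The class name `Database`, the table `USERS`, and its columns `USER_ID`, `FIRST_NAME`, `LAST_NAME`, and `ENABLED` are hypothetical; replace them with your target schema.

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.SQLException;

/** Hypothetical sketch of the Auxiliary Java class (database.java in the attached project). */
public class Database {

    // Static final query strings keep all SQL in one place.
    public static final String CREATE_USER_SQL =
        "INSERT INTO USERS (USER_ID, FIRST_NAME, LAST_NAME, ENABLED) VALUES (?, ?, ?, 1)";
    public static final String SET_ENABLED_SQL =
        "UPDATE USERS SET ENABLED = ? WHERE USER_ID = ?";

    private Database() {}

    /** Inserts one account row and returns the number of rows affected. */
    public static int createUser(Connection conn, String userId, String firstName, String lastName)
            throws SQLException {
        // Closes the statement but not the connection, which the caller is assumed to own.
        try (PreparedStatement ps = conn.prepareStatement(CREATE_USER_SQL)) {
            ps.setString(1, userId);
            ps.setString(2, firstName);
            ps.setString(3, lastName);
            return ps.executeUpdate();
        }
    }

    /** Enables (true) or disables (false) an account and returns the number of rows affected. */
    public static int setEnabled(Connection conn, String userId, boolean enabled) throws SQLException {
        try (PreparedStatement ps = conn.prepareStatement(SET_ENABLED_SQL)) {
            ps.setInt(1, enabled ? 1 : 0);
            ps.setString(2, userId);
            return ps.executeUpdate();
        }
    }
}
```

Binding values with `setString`/`setInt` rather than concatenating them into the SQL string avoids quoting problems and SQL injection from attribute values.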

Clean Compile Package

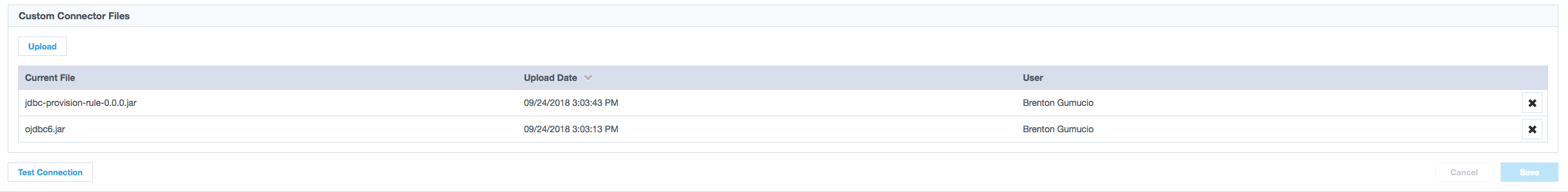

Next, run a Maven clean, compile, and package to build the jar. Upload the resulting jar to the source config.

Final Notes:

JDBC rules can be very complicated depending on the source you are connecting to. If you have any questions, we STRONGLY recommend bringing them to the Expert Services team for assistance.


Could you please share an example Java class file that uses stored procedures and CallableStatements?


Hello all,

Where can I put the jar? The article says:

"The next step is to compile your Maven package. Upload the jar that is created to the source config."


You can upload your jar to the source through the upload option.


I am getting the error below. Any idea what might be the issue?

{"exception":{"stacktrace":"java.security.PrivilegedActionException: org.apache.bsf.BSFException: The application script threw an exception: java.lang.NullPointerException BSF info: JDBC Provisioning Rule Adapter at line: 0 column: columnNo\n\tat java.security.AccessController.doPrivileged(Native Method)\n\tat org.apache.bsf.BSFManager.eval(BSFManager.java:442)\n\tat sailpoint.server.BSFRuleRunner.eval(BSFRuleRunner.java:233)\n\tat sailpoint.server.BSFRuleRunner.runRule(BSFRuleRunner.java:203)\n\tat sailpoint.server.InternalContext.runRule(InternalContext.java:1229)\n\tat sailpoint.server.InternalContext.runRule(InternalContext.java:1201)\n\tat sailpoint.connector.DefaultConnectorServices.runRule(DefaultConnectorServices.java:96)\n\tat sailpoint.connector.DefaultConnectorServices.runRule(DefaultConnectorServices.java:126)\n\tat sailpoint.connector.CollectorServices.runRule(CollectorServices.java:1004)\n\tat sailpoint.connector.JDBCConnector.handleJDBCOperations(JDBCConnector.java:944)\n\tat sailpoint.connector.JDBCConnector.provision(JDBCConnector.java:810)\n\tat sailpoint.connector.ConnectorProxy.provision(ConnectorProxy.java:1079)\n\tat com.sailpoint.ccg.cloud.container.Container.provision(Container.java:286)\n\tat com.sailpoint.ccg.cloud.container.ContainerIntegration.provision(ContainerIntegration.java:156)\n\tat com.sailpoint.ccg.handler.ProvisionHandler.invoke(ProvisionHandler.java:183)\n\tat sailpoint.gateway.accessiq.CcgPipelineMessageHandler.handleMessage(CcgPipelineMessageHandler.java:26)\n\tat com.sailpoint.pipeline.server.PipelineServer$InboundQueueListener$MessageHandler.run(PipelineServer.java:369)\n\tat java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)\n\tat java.util.concurrent.FutureTask.run(FutureTask.java:266)\n\tat java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)\n\tat java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)\n\tat 
java.lang.Thread.run(Thread.java:748)\nCaused by: org.apache.bsf.BSFException: The application script threw an exception: java.lang.NullPointerException BSF info: JDBC Provisioning Rule Adapter at line: 0 column: columnNo\n\tat bsh.util.BeanShellBSFEngine.eval(BeanShellBSFEngine.java:193)\n\tat org.apache.bsf.BSFManager$5.run(BSFManager.java:445)\n\t... 22 more\n"}


Is it possible to share the rule code?


I'm not sure about this, but:

a) If possible, try putting a System.out.println at each step to check how far the flow gets.

d) Did you try running the rule from the IIQ console?

rule "JDBC Provisioning Rule Adapter"


@manoj_caisucar I was able to figure out the issue after using Syso (System.out.println). The issue was in the code, and I have corrected it now. Strangely, log.debug/log.info output was not printed in ccg.log. Any idea how to configure a custom logger in IdentityNow?


It's good to know the root cause.

Did you check Rahul's response in the earlier thread? It may help:

import openconnector.Log;

Log _log = LogFactory.getLog("customJDBCLog");

Import the same in the jar. This lets me print logs to the ccg.log file.

I am able to print logs to ccg.log using log.info, log.debug, etc.


@manoj_caisucar I used the Apache logger in the rule as well as in the custom code, and the rule is already uploaded to our tenant:

import org.apache.commons.logging.Log;

import org.apache.commons.logging.LogFactory;

Log _log = LogFactory.getLog("customJDBCLog");


You can use System.out.println in the jar file and it will write your output to the CCG logs.